|

Middleware

v2.3.0

|

The tracking algorithm uses internal sensors to track the 3D motion of every thimble in space. Additional Time-of-Flight (ToF) sensors compensate for the drift that affects IMU sensors.
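As an illustration of why a drift-free measurement helps, the following Python sketch integrates a biased gyroscope reading with and without correction. It is not the WEART tracking algorithm: the complementary filter, the function names, the bias value, and the use of an absolute angle as a stand-in for a ToF-derived measurement are all assumptions made for this example.

```python
def integrate_gyro(rates, dt, bias=0.0):
    """Integrate angular rates (deg/s) into an angle (deg).

    A constant sensor bias is integrated along with the signal,
    so the error grows linearly with time (drift).
    """
    angle = 0.0
    for rate in rates:
        angle += (rate + bias) * dt
    return angle


def complementary_filter(rates, references, dt, bias=0.0, alpha=0.98):
    """Blend the integrated gyro angle with an absolute reference angle.

    `references` is a hypothetical drift-free measurement per sample.
    `alpha` close to 1 trusts the gyro in the short term, while the
    reference slowly pulls the estimate back and bounds the drift.
    """
    angle = 0.0
    for rate, ref in zip(rates, references):
        predicted = angle + (rate + bias) * dt
        angle = alpha * predicted + (1.0 - alpha) * ref
    return angle


# A finger held still at 0 degrees for 10 s, sampled at 100 Hz,
# with an assumed gyro bias of 0.5 deg/s.
n, dt = 1000, 0.01
rates = [0.0] * n
references = [0.0] * n  # stand-in for a drift-free absolute measurement

drift_only = integrate_gyro(rates, dt, bias=0.5)
corrected = complementary_filter(rates, references, dt, bias=0.5)
print(f"gyro only: {drift_only:.3f} deg, with reference: {corrected:.3f} deg")
```

Under these assumptions the uncorrected estimate drifts by about 5 degrees over 10 seconds, while the blended estimate stays within a fraction of a degree of the true angle.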

With the WEART Middleware application for Windows, you can export the raw sensor data from a running session to a log file, even when using two TouchDIVERs simultaneously.

The recorded data can be used to compare finger motions against a ground-truth tracking system, to evaluate the accuracy of the system, or to fine-tune external tracking algorithms.

The TouchDIVER's sensors must be properly calibrated to provide optimal motion tracking. The offsets detected in all IMU sensors are corrected during manufacturing. Because these offsets change over time, due to the environment in which the device is used or to physical stress on the sensors, the software lets you repeat the calibration whenever necessary.

The TouchDIVER uses IMU (Inertial Measurement Unit) sensors, which must be calibrated to track finger movements accurately. You can run the calibration procedure whenever necessary, since sensor offsets shift with the operating environment and with physical stress on the sensors.
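To make the idea of a sensor offset concrete, here is a minimal Python sketch of one common way to estimate it: average the readings while the device lies still, then subtract that average from later readings. This is a generic technique, not the Middleware's actual routine; the function names and the sample values are hypothetical.

```python
from statistics import mean


def estimate_offsets(stationary_samples):
    """Estimate per-axis offsets from (x, y, z) gyroscope samples.

    Assumes the device was motionless while the samples were taken,
    so any non-zero average reading is attributed to sensor offset.
    """
    xs, ys, zs = zip(*stationary_samples)
    return (mean(xs), mean(ys), mean(zs))


def apply_offsets(sample, offsets):
    """Subtract the estimated offsets from one raw (x, y, z) sample."""
    return tuple(value - offset for value, offset in zip(sample, offsets))


# Hypothetical readings (deg/s) from a device resting on a flat surface.
stationary = [
    (0.52, -0.31, 0.10),
    (0.48, -0.29, 0.12),
    (0.50, -0.30, 0.08),
]

offsets = estimate_offsets(stationary)
calibrated = apply_offsets((0.50, -0.30, 0.10), offsets)
print("offsets:", offsets)
print("calibrated sample:", calibrated)
```

Averaging over a longer stationary window reduces the influence of noise on the estimate, which is one plausible reason for keeping the device at rest on a flat surface during calibration.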

First, connect only one device at a time to the Middleware; it cannot calibrate multiple devices simultaneously.

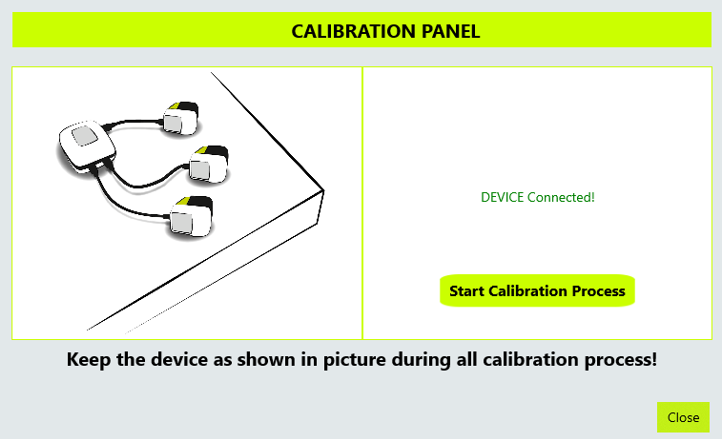

With the device connected, click the Settings icon in the Middleware UI and press the Go to Panel button for device calibration. The following panel will open.

Place your device on a flat surface, as shown in the image, then click Start Calibration Process. After you confirm, the procedure automatically determines the offsets of all the device's sensors. After 30 seconds, a notification reports whether the calibration succeeded or failed.

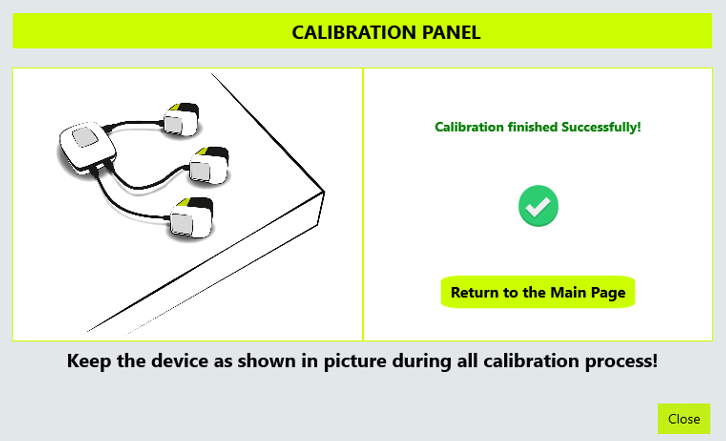

The calibration has completed successfully if the following information is displayed when the procedure ends.

If the procedure fails, a notification appears that briefly describes the cause of the failure.

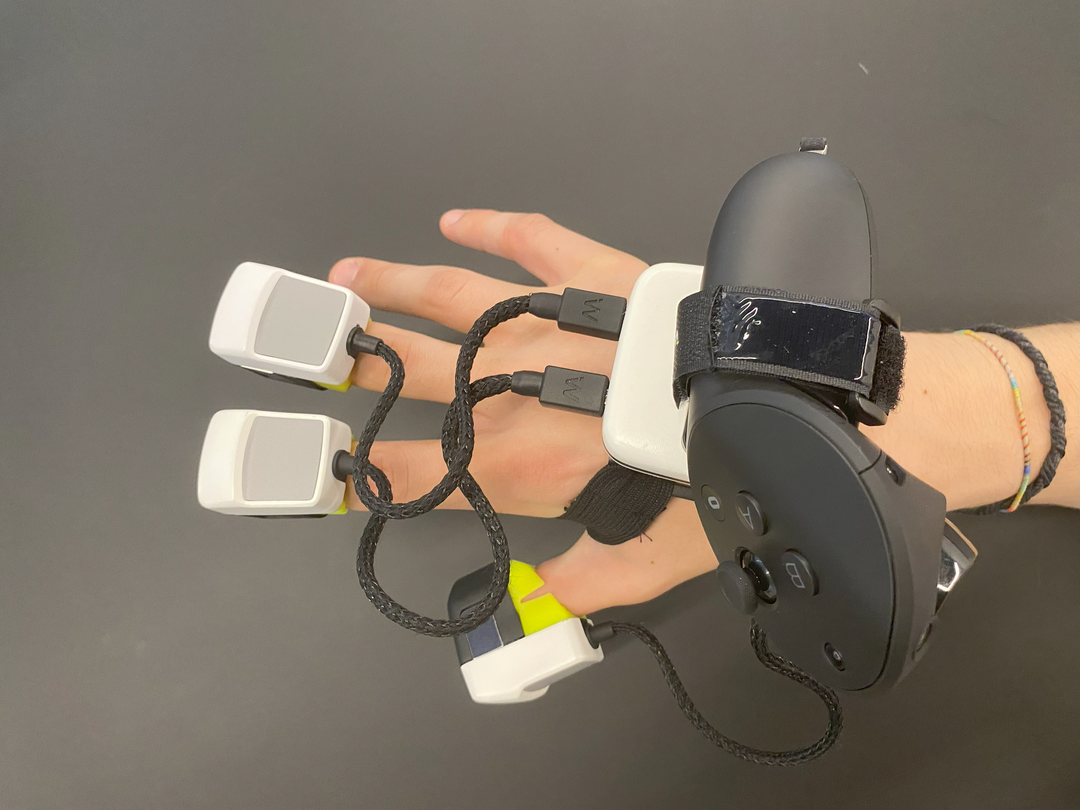

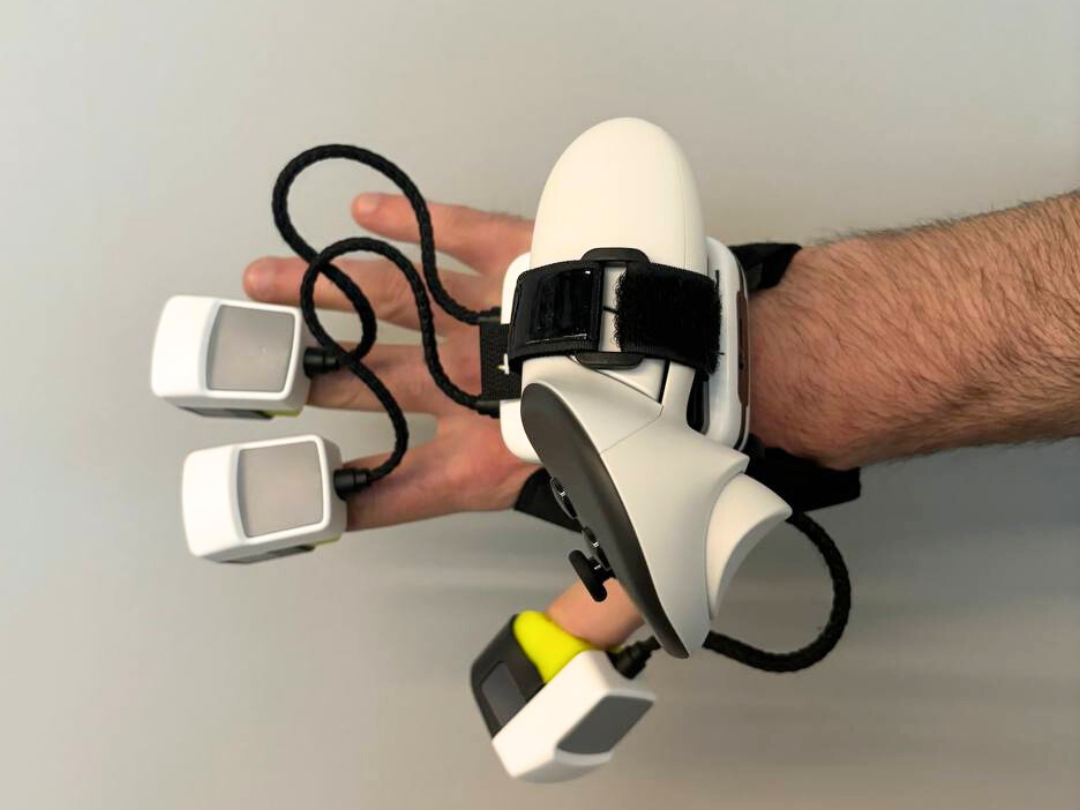

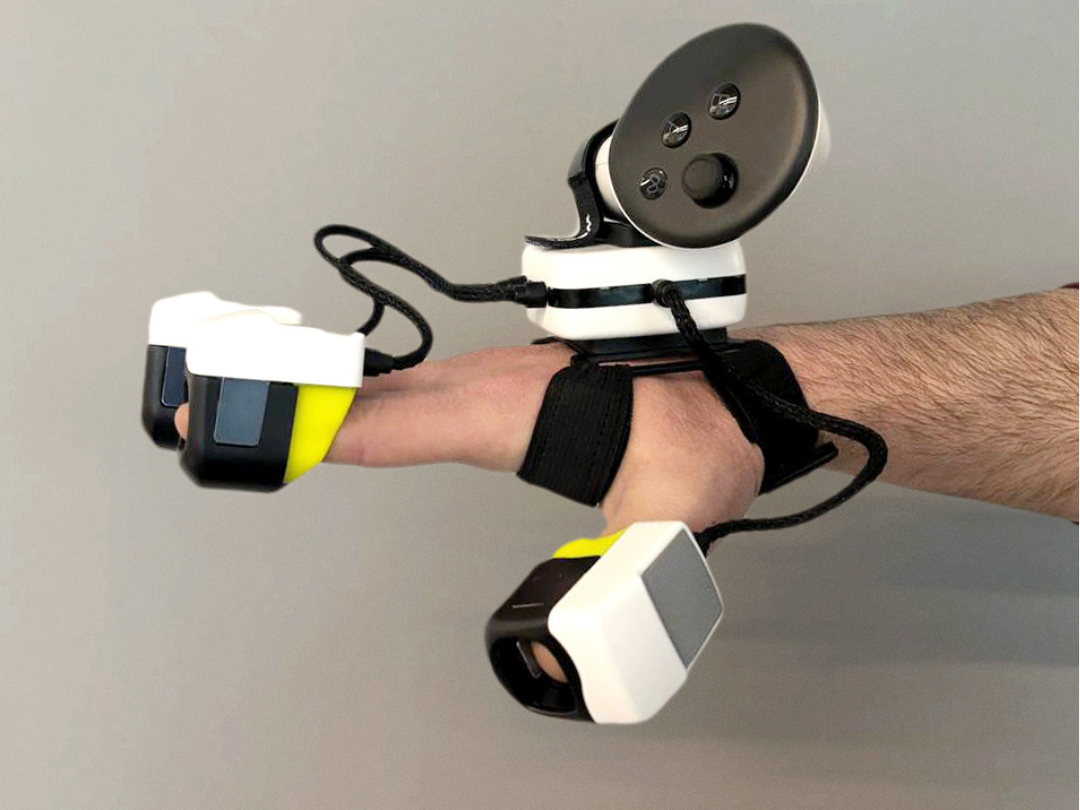

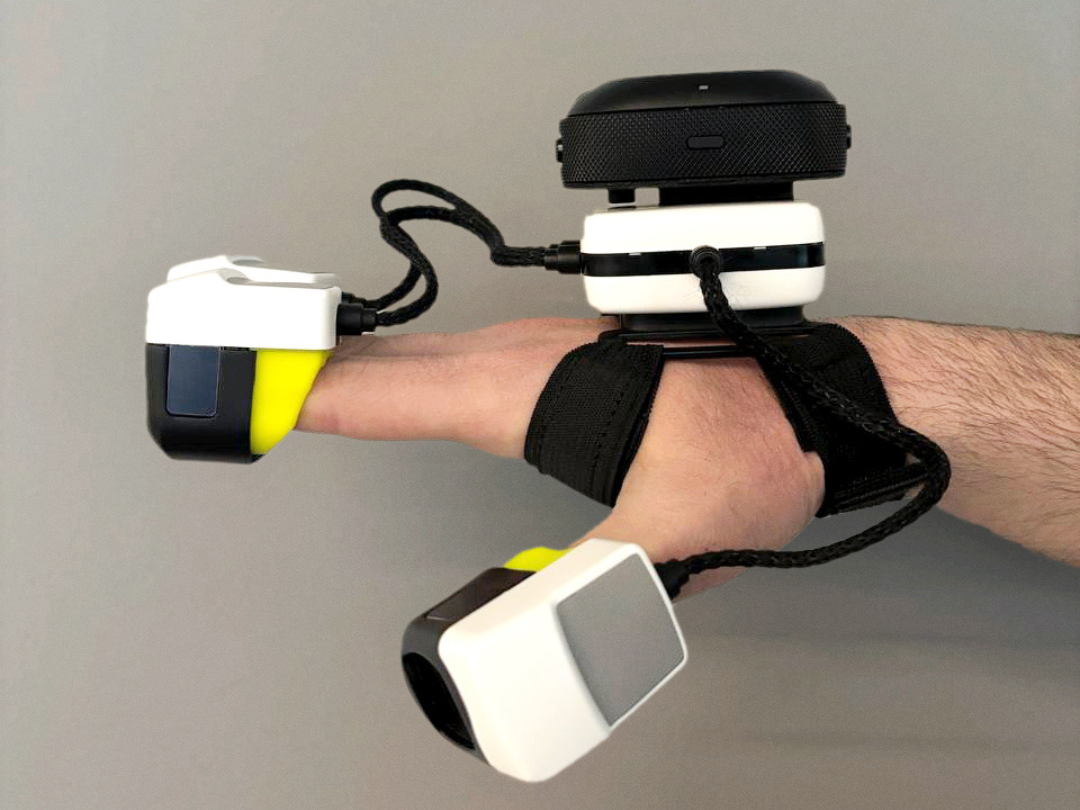

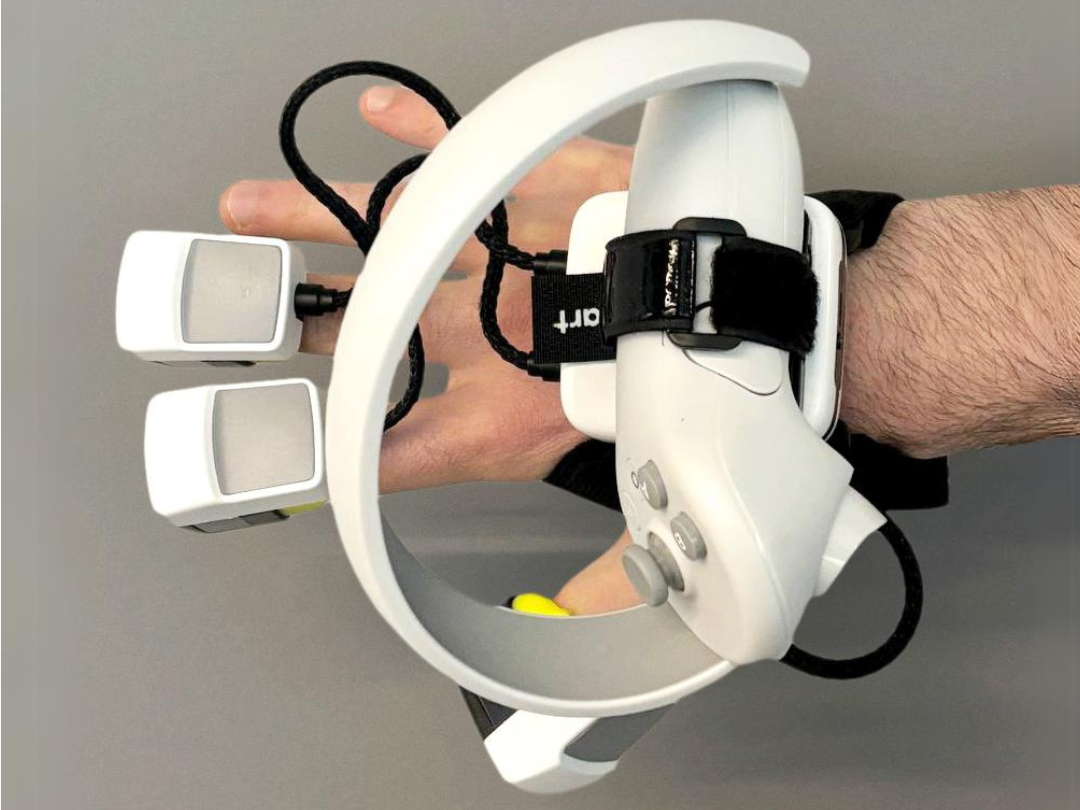

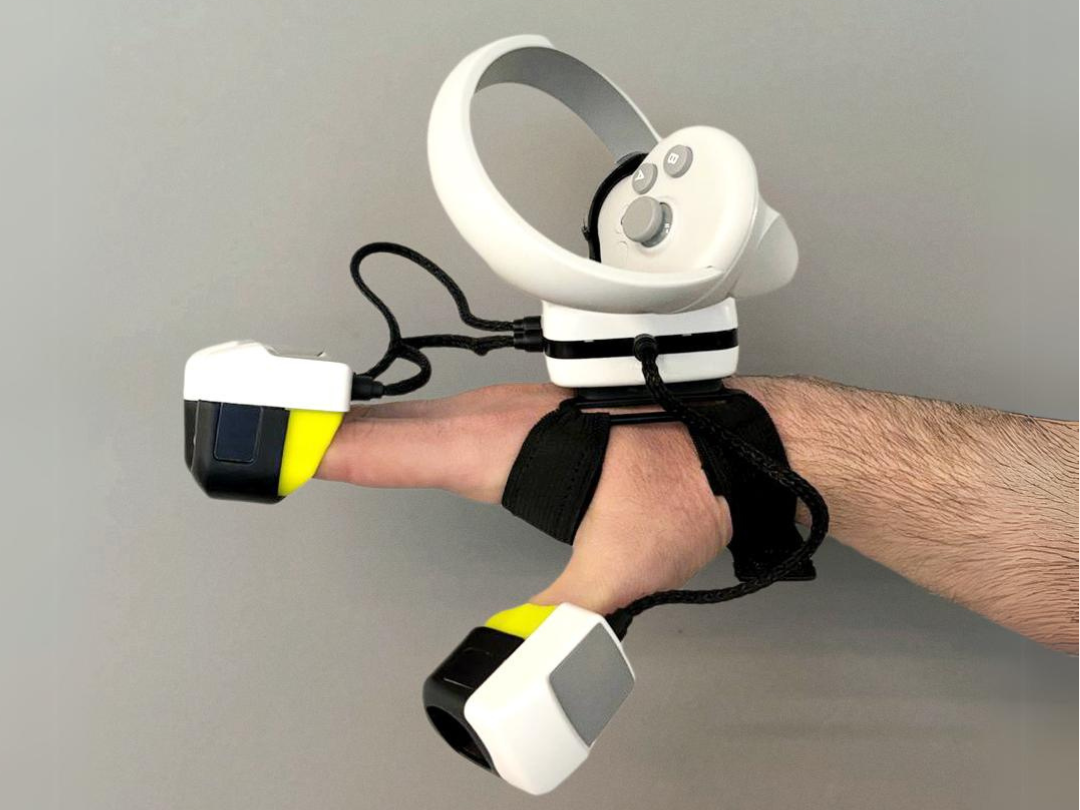

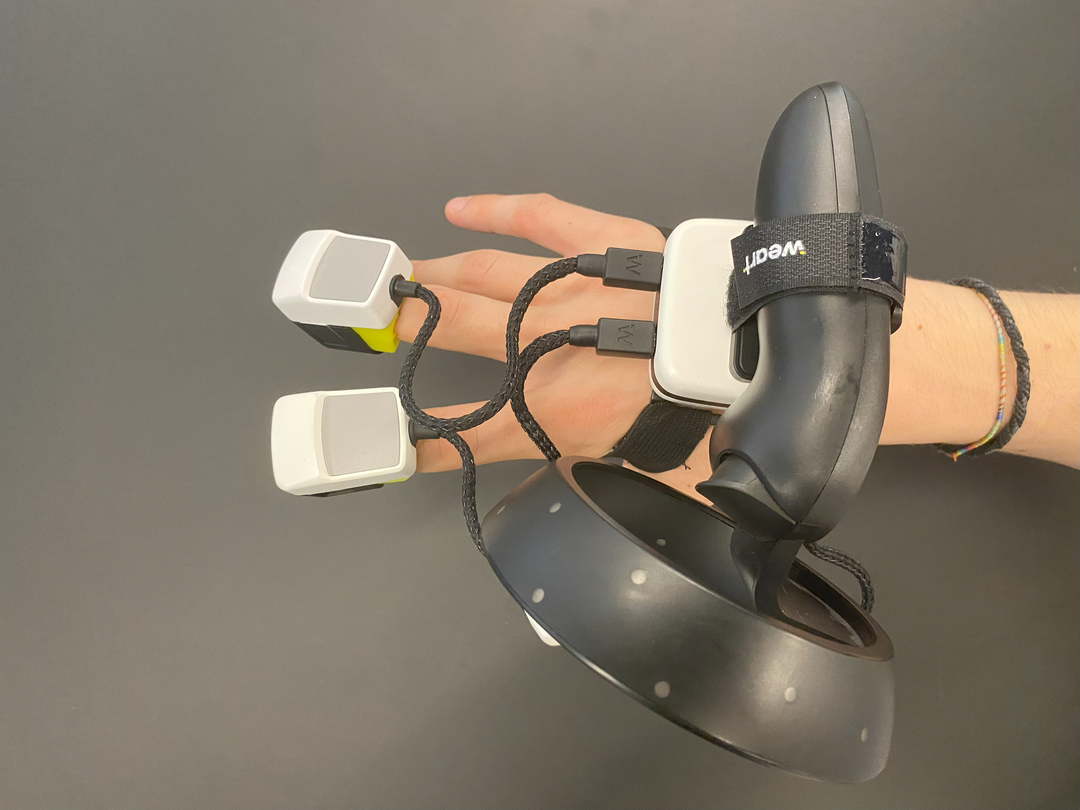

A photo that demonstrates how to properly wear the TouchDIVER is shown below.

The finger tracking algorithm provides movement detection for VR experiences. It is launched together with any experience that uses the WEART SDK, so that the individual fingers of the virtual hand can move.

The algorithm must be initialized from a specific starting position, shown in the following image.

The left hand works exactly the same way, mirrored symmetrically.

In a 3D environment, an external system is needed to determine the position of the hand. To use hand tracking in a VR experience, it is therefore essential to use a VR controller, which can accurately determine its position in the environment thanks to its optical sensors.

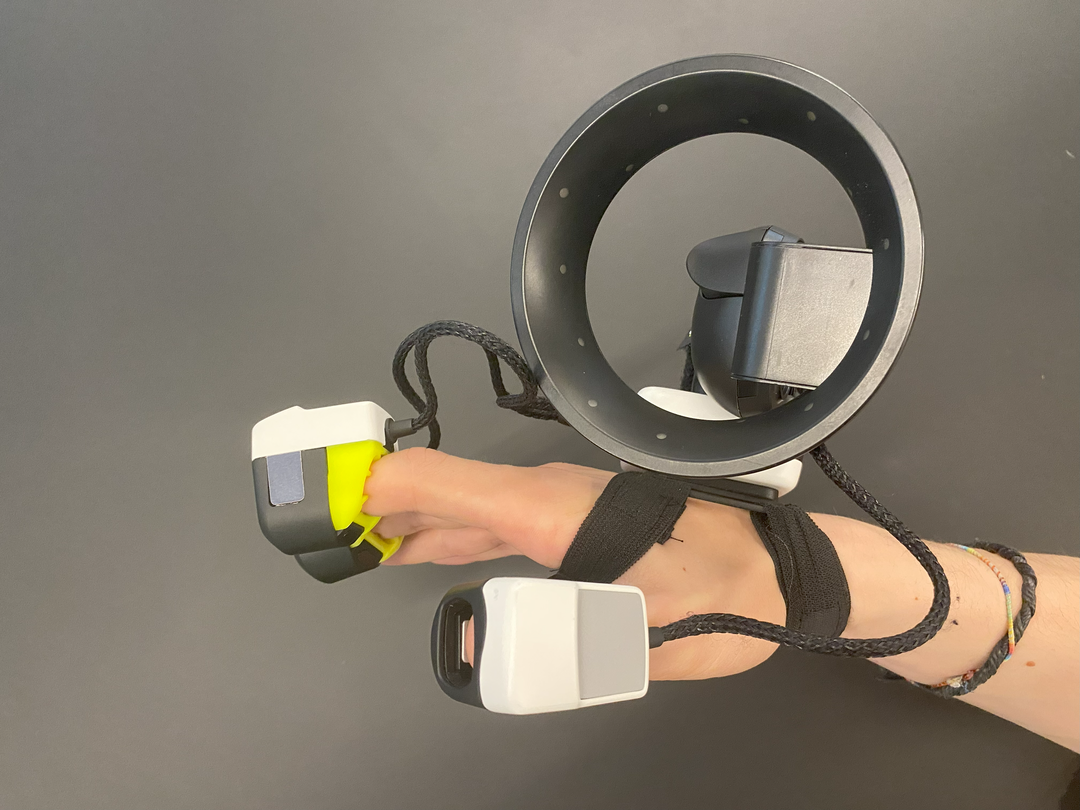

The controllers compatible with the TouchDIVER are shown below.

Beyond controllers, the TouchDIVER is compatible with a wide range of Virtual Reality (VR) and Augmented Reality (AR) systems. It is also compatible with all Motion Capture (MoCap) systems, so users can capture and translate their physical finger movements accurately.

The image above shows the TouchDIVER integrated with the ART tracking system for motion capture.